The Singularity Nonevent; or,

What Makes Us Human?

If we were all Ms. Brights, we could throw away those mega-vitamins. We’d get younger every time we came home. But we’d have a different set of problems: we’d never get to grow up.

Stumbling Into the Fray

I became aware of the boundary-pushing futurists accidentally. I was reading the Wall Street Journal and thinking about the connections between global finance, technology, and job loss, and how they have taken so many people unawares and left them stunned. I used to edit professional books, many of them in finance, and I've grown antennae for volatile economic headwinds.

I also love astronomy. Finance and astronomy have taken me to some strange places, but none as unusual as the conundrum that much-needed computer science talent is being diverted into an inquiry into reprogramming our humanity, mapping our neurology and biology as blueprints to change ourselves.

Photograph by Frances Stevens.

It started with Alan Lightman’s beautiful little book, The Accidental Universe. In his final chapter he talks about the effect of the unseen world on our understanding of reality—how quantum physics has revealed a world "almost unfathomable from our common understanding" of it (p.135). How ironic, he says, that the science which has brought us closer to nature by revealing the natural world to us is also separating us from it. Quantum mechanics, the science behind the technologies that drive our devices, is getting us used to experiencing the world a step away from our sensory reality. Will seeing a real snake in the woods someday be considered a less desirable or inferior experience to seeing its picture blown up on a screen? Will we prefer a disembodied self to what we have now, even if that means losing some of our human traits?

Then I found Jaron Lanier’s Who Owns the Future? Lanier is a computer scientist with a clear writing style who is at home in the real world. I didn't know who he was and thought I was buying an economics book. His concern for the financial dignity of working people in the face of disruptive technologies is in line with my own thoughts. It was toward the end of his book that I learned about the futurists' obsessions.

I started to read them and listen to their videotapes. Oh, what a Pandora's box I opened for myself. I had to put on my editor's hat. I've slogged through many a messy manuscript on subjects I wasn't an expert in; it's like putting together a puzzle. Only this puzzle has parts that fit and parts that don't.

Some of the futurists look forward to extended lifespans of 1000 years or more. Others predict a scenario in which our machines will become so super-intelligent that intelligence mayhem will ensue if we don't start now on a plan to thwart it. Some even say we can one day soon change our status with respect to the universe, now that we know so much about the natural laws that underpin it. This latter idea depends on duplicating the human brain through reverse engineering.

In their eyes, technology not only holds promise for medical intervention and greater ease of task performance, it augurs potential changes to our species. It's not apples and oranges, it's apples and silicon.

At St. Joe's, my elementary school, I learned about the universe, and I learned about heaven. It was the early 1960s, the Russians had an aggressive space program, and our rote classical education system intended to keep up. I was taught by nuns, women who did nothing but teach, and some of them were brilliant. It was my own little Harvard.

I loved thinking about our earth and moon spinning on their axes, orbiting the sun, in constant motion. I stood outside my house with my arms straight out. Why wasn’t I dizzy? Did my hands or fingers wobble? The planetarium in the museum near our house was a favorite Saturday hangout. I wanted to get the constellations right so that on a good night I could lie in my backyard and find them (The Big Dipper!! I see it!!).

But where was heaven? The Sisters of St. Francis, who taught us, were a math and science order, and their approach to religion as I look back on it was philosophical. Catholicism’s gentle side (kindly Jesus, the Blessed Mother) was foremost, but by sixth grade, religion class was getting somewhat profound.

We learned that God didn’t interfere in the workings of his creatures on earth even though he knew all of our actions past, present, and future. For God, there was only the eternal present (a quantum field-like theory, you might say). Our time was different from eternity, we lived and had free will in this time, and our conscience and sense of responsibility could be tested.

So was heaven past Neptune and Pluto (so cold there!)? Was it on the other side of the universe? Where was our soul? In heaven you live forever. Your body will have no biological needs, no urgencies; it will be your perfect body, one with your soul, in everlasting joy of the sight of God.

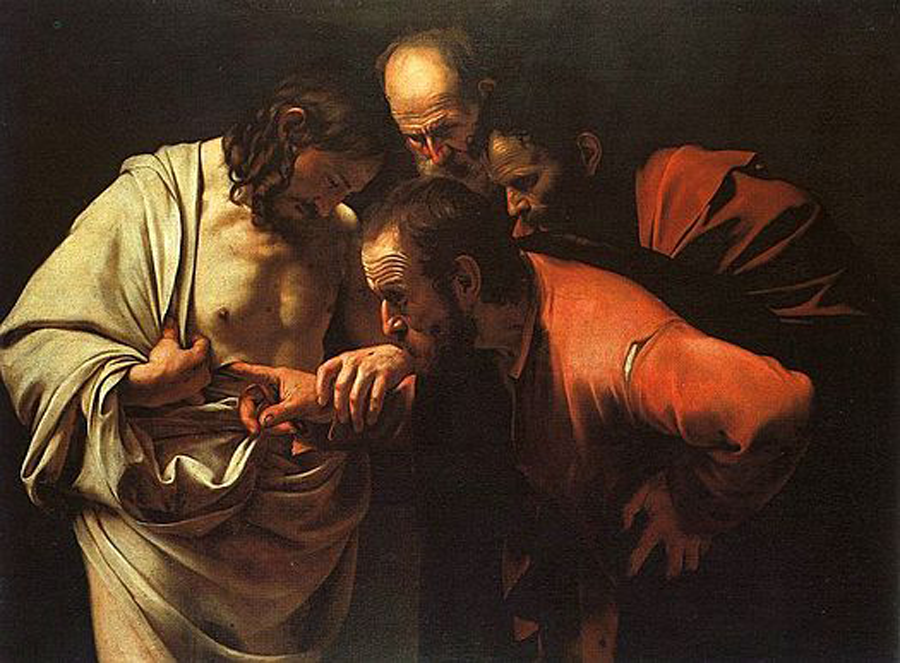

Housed in Sanssouci Palace, Potsdam. Public domain image via Wikimedia Commons.

I knew when I learned about the apostle called “doubting Thomas” that I was like him. Thomas wanted proof of Christ's resurrection; he had to put his hands through the wounds to prove this was the real Christ. But that was okay. Christ hand-picked him as one of his twelve, knowing he would doubt.

I felt guilty for being uncomfortable with the idea of living forever in heaven, where there is eternal joy. What if I got tired, living forever?

Our Whirling Tiny Particle

Sean Carroll, a theoretical physicist at the California Institute of Technology, writes in the beginning of his book The Big Picture that since people started thinking, they have contemplated their reality with respect to the universe:

[T]here has been a shared view that there is some meaning, out there somewhere, waiting to be discovered and acknowledged. There is a point to all this ... This conviction has served as the ground beneath our feet, as the foundation on which we’ve constructed all the principles by which we live our lives. (Big Picture, p.9)

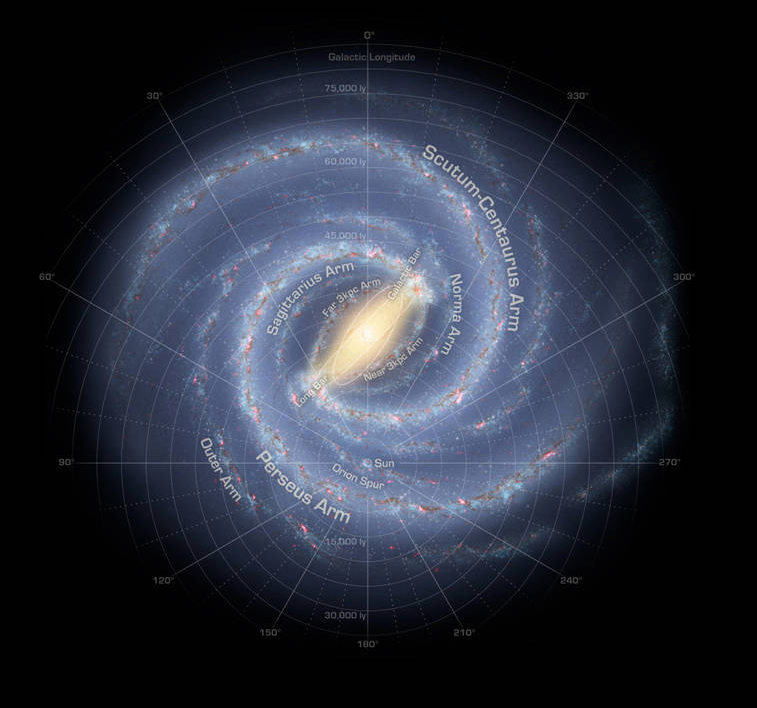

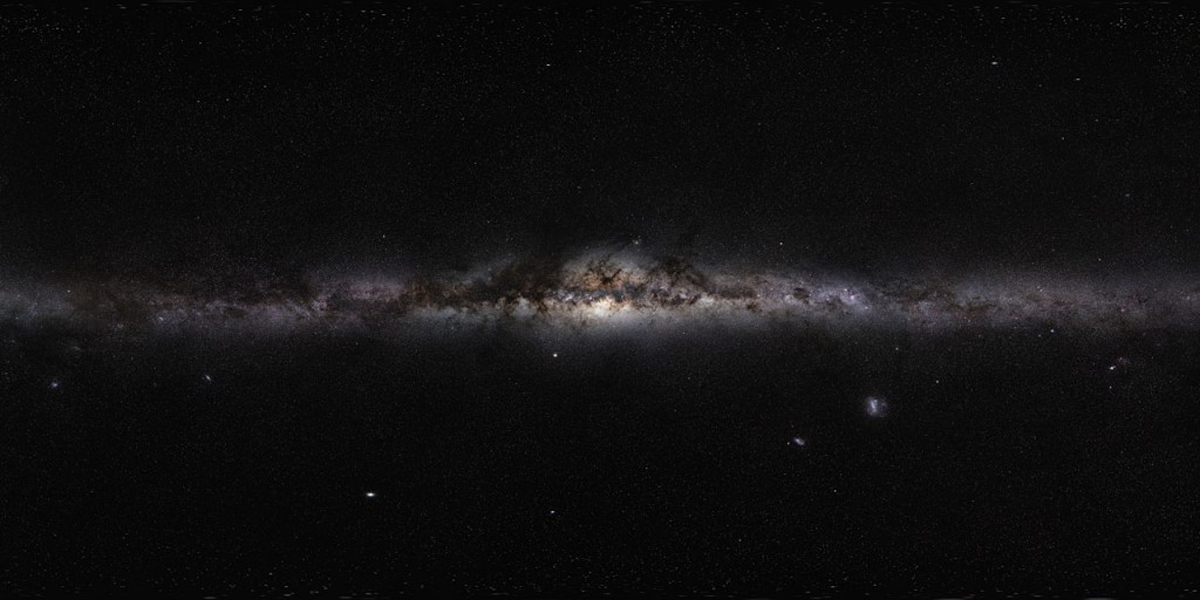

What is our human relationship to the universe? The first picture below pinpoints the position of our rather small sun at the 270-degree mark (third line on the right) within the Milky Way galaxy:

According to NASA, there are between 100 and 400 billion stars sharing the space with us (see space.com for how this number is calculated).

The extraordinarily beautiful next image of our Milky Way Galaxy is a 360-degree panorama taken by the photographer Serge Brunier in 2009, the International Year of Astronomy, with assistance by the European Southern Observatory.

This 2004 pdf gives a thorough description of the galaxy.

But we’re still not in the ball park. The following picture, taken by the NASA/ESA Hubble Space Telescope, shows “a legion of galaxies,” thousands of galaxies moving through space, and our Milky Way is nowhere in sight, except for a few stars in the foreground. We're getting smaller and smaller in this view, less rather than more cosmically significant.

The above images are all exquisite. They rally our thought processes. If you go to NASA's image gallery you will see its photos of the eerie beauty of deep space, moving inexorably according to its own laws.

Richard Feynman has described this landscape and our place in it in plain astrophysics English:

The size of the universe is very impressive, with us on a tiny particle that whirls around the sun. That's one sun among a hundred thousand million suns in this galaxy, itself among a billion galaxies. (The Meaning of it All, p.38, emphasis mine)

And Carroll is correct: seeking out the universe and our place in it is a human compulsion. Like James Joyce's young Stephen Dedalus writing his address in his Class of Elements at Clongowes School (Portrait of the Artist as a Young Man, p.15), I wrote my address at St. Joe's:

Lancaster

Pennsylvania

United States

Western Hemisphere

Earth

Universe

As constricting as it may seem to some, our local address is planet Earth, our tiny whirling particle. Our powerful machines and rockets are like little toys in the universe. We may make field trips to Mars someday; we probably will. But we'd get quickly demolished trying to take a safari through deep space.

Here's the rub: we're not just any old interchangeable collection of atoms, we're a very specific collection that is the human species, "thinking and feeling people who bring meaning into existence by the way we live our lives" (Big Picture, p.9). Now that's a responsibility, to bring meaning into existence.

Back to the Futurists

The futurists are believers, goal-oriented believers in our human destiny. They give dates for the events they foresee. (Their desires are touching in their own way.) The most compelling unlikely event is the creation of machines with human consciousness. I'm no computer scientist; we're all somewhere in the cloud; our consciousness leaves bits of itself there, to some extent. But think about it. While certain machines might have a "machine consciousness," if you will, I'm unconvinced a machine will ever function like a human brain, much less a human person.

I had to go back to astrophysics and neuroscience (which I'm also not expert in but do love), where my editor's brain knew it was reading clean science.

Computer scientists are very good at the things they are very good at. They are not experts in other fields. Assuming otherwise is an occupational hazard of some bright people in lots of fields.

I do want to be careful—the futurists are good people. But because technology dominates our lives in unseen ways, we should work at understanding as best we can the claims it is making on us and our humanity and how those claims may collide with our own expectations for ourselves.

What Are They Saying?

They're not all saying the same things, although they all base their theories on what they believe is sound reasoning. They've collected loyal, happy followers around the globe, but they've also gotten the attention of world leaders who know they must keep up with the new technologies and the issues they give rise to.

Aubrey de Grey

Aubrey de Grey, the biomedical gerontologist and chief science officer of Strategies for Engineered Negligible Senescence (SENS), which he founded, is working on his path to eternal youth and health via the "engineering approach." This method aims to keep the body forever below the threshold point at which aging begins (before the onset of cellular and molecular senescence).

De Grey states with authority that we are within striking distance of ending old age. Presently, he says, the first person to live to 1000 is only ten years younger than the first person to live to 150. To the biologists who express skepticism, he states they "better damn well" give good reasons for it, as he's written and talked quite a lot on this (de Grey, see References).

All we have to do to stem aging is figure out enough about the types of age-specific damage that we need to fix, in order to come up with rational designs of the necessary interventions. He says these things as though they were fairly simple and obvious. He mentions cancer as one such age-related scourge, yet cancer cells are in all of us all of the time, and children suffer from almost every kind of cancer.

To those who argue that greatly extended life is a selfish pursuit—what about having children?—he argues that a very low death rate will benefit future children and that there will be fewer children someday, anyway. Fertility rates are going down across the world; reverse-aging will end the problem of menopause and women could wait until age 70 or so to have their first child. To those who are concerned about a population crisis, he says that concern is "very overblown" and that if we continue to work on environmental issues as we should (a big if), the earth will be able to sustain more people with less environmental impact. (So much for children.)

Poets, like detectives, know the truth is laborious.

—Colum McCann, Thirteen Ways of Looking, p.42

Ray Kurzweil

The inventor Ray Kurzweil believes in the "computational theory of mind." The human brain runs like computer software, at once on different layers and in different combinations. He predicts that by 2030 we'll have reverse engineered the brain and constructed it digitally. He thinks the brain just isn't as complicated as some say it is. We'll not only duplicate the human brain, we'll make it better.

But he may be selling our brains short. The brain is dependent on interactions with its biological owner and its owner's environment, and the Kurzweil puzzle pieces, his ideas about brain structure, don't fit here. Our brain uses our sensory input (what we see, hear, touch, do, feel, etc.) as an organizing principle. Brain development is not only more complex than Kurzweil describes, but it's a complex, continuing process with no center of control. When you exercise your body, your brain is participating mightily. Your brain is making a heap of connections as you read this.

Kurzweil says the great advantage of being programmed will be the superior maintenance of our biological life (like in heaven?). Mortality will be in our hands. There will be no distinction between human and machine, physical and virtual reality. We'll upload data inclusive of the functions responsible for thought, feeling, emotion, and human interactions. He likes to emphasize in his writings and video appearances that our machines will have "emotional intelligence" (his words):

[O]ur technology will match and then vastly exceed the refinement and suppleness of what we regard as the best of human traits. (Singularity Is Near, p.9)

But software for "reading" emotions is not equivalent to emotional intelligence. Emotional intelligence is a perceptive skill applicable to a human relational field. It is not emotion. It is not having emotions, or knowing how to read them, or knowing about them. It is a skill that requires practice, whereby one understands in a finely tuned way the situation and experience (not the emotion, which is apparent) of another. It can be studied, tested, and improved upon, like other life skills. It's not about you unless you are using it on yourself, watching yourself, therapy in action, from a mental distance.

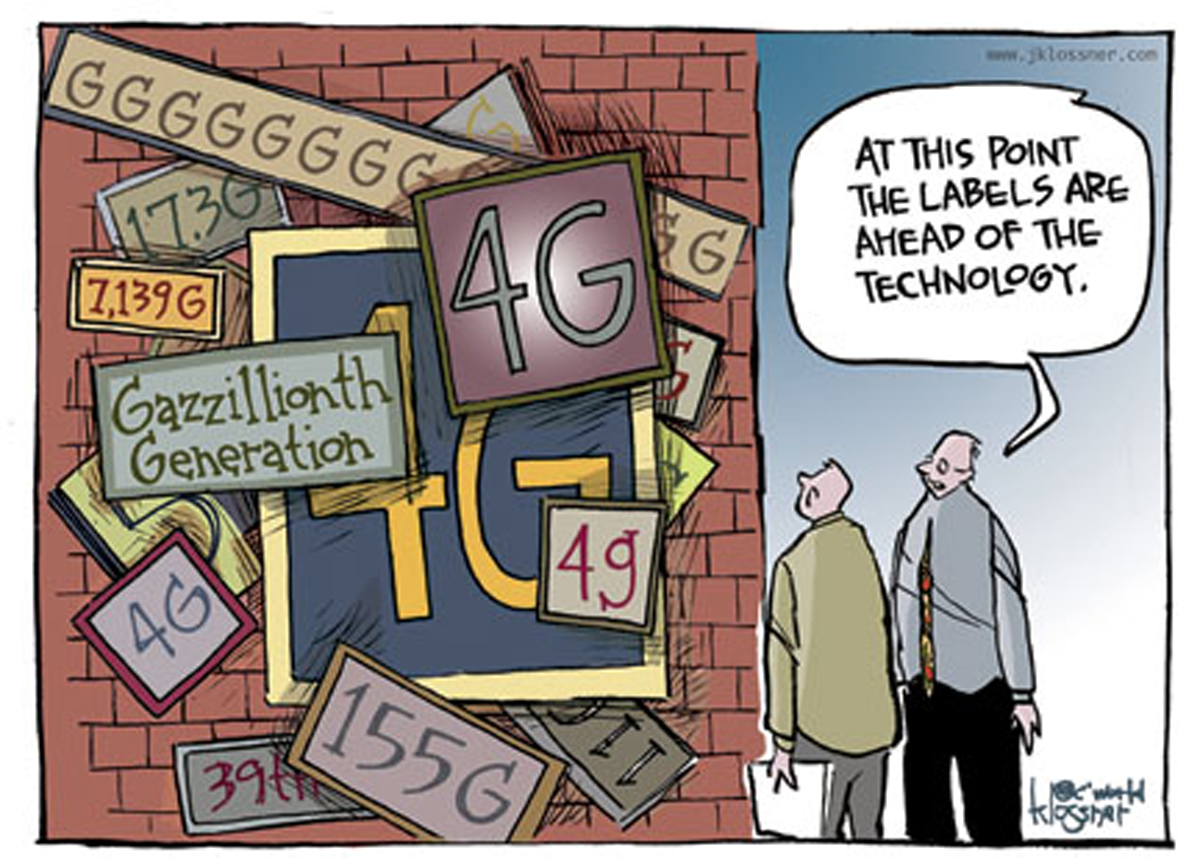

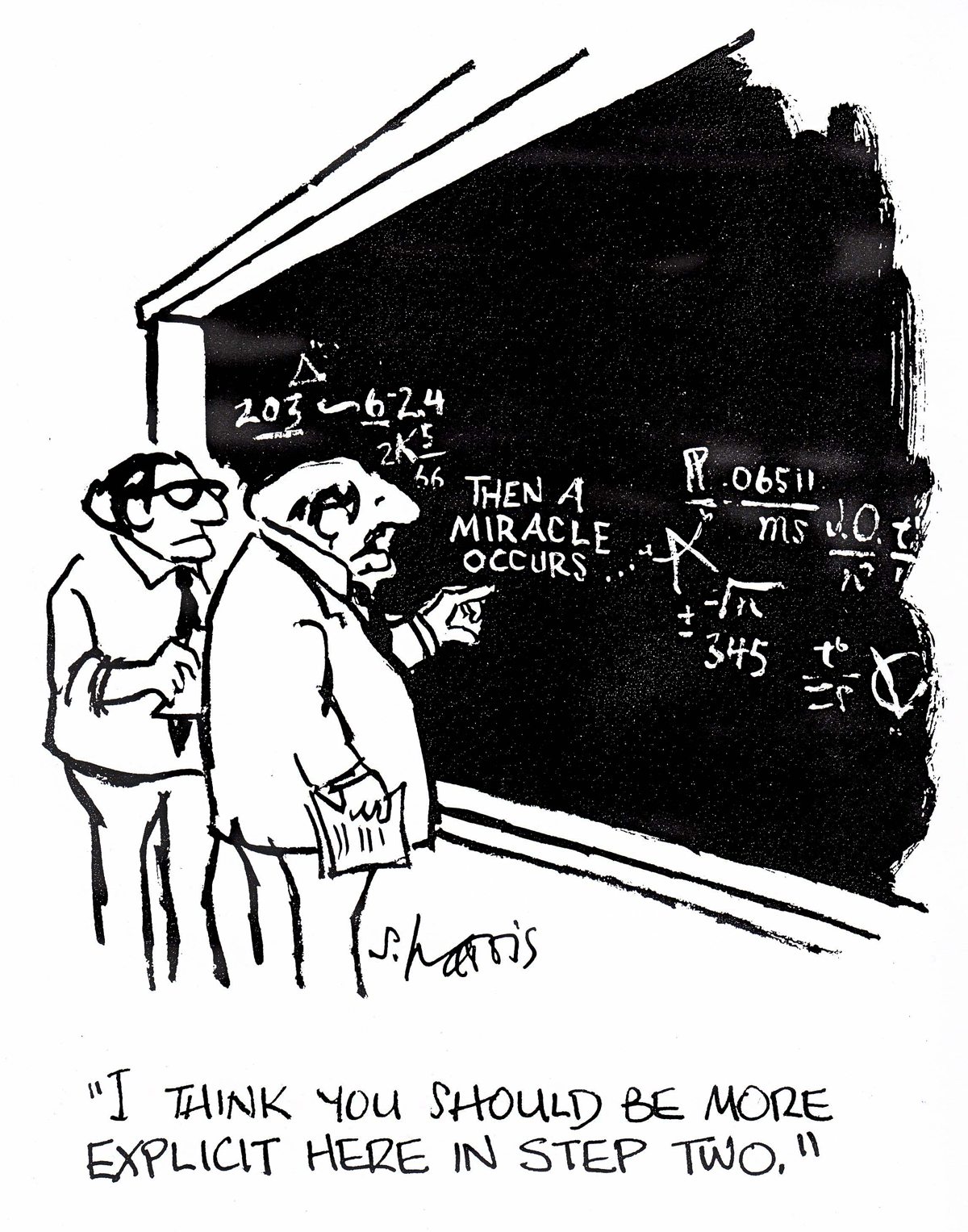

How will a digitized brain practice and study a skill like emotional intelligence? Or a skill like scientific investigation? With highly complex requirements for awareness, connections, and thinking, such skill development does not have set neural patterns. Digitized networks programmed to connect at the intersections of specific phrases or word patterns, for example, will not have at their disposal the huge and evolved memory bank of a real brain. That is, they won't have connections to other words, phrases, memories, times, places, experiences, individuals, etc. that our biological brain associates with them. Scientific theorizing based on desire for a certain outcome is captive science, not free. John Klossner's cartoon (below) is a traffic jam of futurism.

Preloaded Brain Chips

The video below of astrophysicist Neil deGrasse Tyson interviewing Ray Kurzweil has a certain charm. DeGrasse Tyson tells Kurzweil that he wants to talk like they're "at a bar" and he brings a warmth to the conversation that Kurzweil reciprocates. It's nice to see, because in so many Kurzweil videos his monotone delivery is both run-on and sleep-inducing, like a good bit of his writing. But he did give us the charge-coupled flatbed scanner, omni-font optical character recognition, and print-to-speech reading for the blind, among other things. He wants the finer things in life, like we all do.

Yet in a revealing moment (6:33 min. ff), he tells deGrasse Tyson that having a preloaded chip of The Brothers Karamazov in his brain would really speed up his reading of the novel—otherwise "it could take months" to get through it (his emphasis). Months?

What about the experience of reading for its own sake, of falling into language as art, turning a page, stopping to think, going back and rereading, putting the book in your lap for a moment, processing your thoughts and feelings? It's good to know (as he states, 7:50 min. ff) that Kurzweil considers great novels to be in the top of the cloud hierarchy. He should take some time for Fyodor Dostoyevsky. He might be amazed.

Kurzweil believes some curious things. Virtual reality will compete with real reality, he says (real reality?), and with VR we’ll be different people both physically and emotionally. (What if we like ourselves as we are?) Our romantic partner will be able to change our body to his or her liking. The whole universe will get saturated with intelligence, at the maximum speed at which information can travel, and there may even be a way as yet undiscovered to move that information faster than the speed of light. We’ll have conquered celestial mechanics; things will move along fast!

Science is skeptical. Science teaches us to be our own theory’s harshest critics, to try to disprove our favorite ideas, which is counterintuitive but which helps us resist the lure of wishful thinking.

—Sean Carroll, ScienceNet 8:40 min.

Kurzweil seems not to notice any problem with the implications of his theories. We'll replace the past; we'll shelve the words of the novelist William Faulkner who cautioned that "The past is never dead. It's not even past" (Requiem for a Nun, Act 1, sc.3). We'll all be better off when the singularity occurs, when our old selves, our humanity as it's evolved over eons, is obliterated in favor of new—more vibrant, younger, smarter, healthier—selves.

Robot Molecules, Ultimate Dreams

In a recorded conversation of just a few months ago, Kurzweil talked with Robert Freitas, a “nanorobot theoretician” at the Institute for Molecular Manufacturing in Palo Alto. Mr. Freitas predicts that in 16 years, he and his co-workers should have a working desktop nanofactory. He states on the Foresight Institute website: "Once nanomachines are available, the ultimate dream of every healer, medicine man, and physician throughout recorded history will, at last, become a reality."

In the 2030s, nanorobots will displace other forms of nanotechnology and give us all “very long, extended lives.” Biological components are inherently slow, not as reliable as mechanical components, so we’ll replace the former with artificial white blood cells programmed to detect bad bacteria. We’ll have a nanorobot called a chromallocyte that will “go through the tissues” to find the cells and “change out the chromosomes with newly manufactured ones that have been corrected” and then “dispose of the old genome,” said Mr. Freitas.

Kurzweil responded by saying he’d like to replace biological systems with engineered systems. But here we run into another piece of the puzzle that won't fit. What happens when our robotic bio-replacements wear out, get hacked, or unpredictably malfunction? My bigger question is, if it ain't broke, why fix it? (To get even messier, we could bring in Nassim Taleb, who in The Black Swan reminds us that predictions are usually wrong and the unforeseen event is a very real part of any equation.)

See also Kurzweil, How to Make a Molecular Nanobot

Take a potentially deadly bacterium like meningococcus. As explained to me by a physician friend, we all acquire this bacterium randomly and periodically, albeit infrequently. The body’s immune system sees it as foreign, coats it with opsonins, makes humoral antibodies to it, and ingests it with macrophages, destroying it. The question is, how could you know ahead of time the different factors that predispose one person to getting the disease and dying within hours and another to not getting it at all? Some immune systems work better than others, and bacteria, viruses, and other germs are constantly mutating. Germs are promiscuous: they're constantly exchanging DNA.

Having listened to the Kurzweil-Freitas video, my physician friend said that some of Freitas’s work is potentially useful and he's obviously bright, but she pointed out problems with his applications. "The question isn’t how fast can you kill the deadly bacteria, but how can we know whether it's already resistant to an artificial, programmatic killing mechanism? Speak to any geneticist, oncologist, or infectious disease specialist, and they will quickly tell you that it is impossible to account for all possibilities," she said.

Linus Pauling, a great American scientist, won the Nobel Prize for Chemistry in 1954. He made important scientific discoveries in physical and structural chemistry, and in organic and inorganic chemistry, and used quantum theory and quantum mechanics to study atomic and molecular structure and chemical bonding. But in his later years he turned his interest to biochemistry, specifically the function of Vitamin C in the human body, and he wound up overstating its benefits.

He turned his focus to health foods, mega-vitamins, and dietary supplements which, among other things, he claimed could cure mental illness. None of his claims in these areas were ever borne out by serious researchers, who undertook extensive studies on his behalf at the Mayo Clinic and elsewhere. Yet his mega-vitamin work is what he's now known for in the popular culture.

This fact comes to mind in reading Kurzweil, who is short on crucial medical and scientific specifics such as the role of neuroscience, chemistry, and biology, as well as the necessary contributions of surgeons, medical researchers, and technicians needed to bring his ideas to life. We'll need a ton of these professionals; they'll have to want to participate, and I don't even know how to envision the line-up of consumers.

And how will a trend to obliterate the biological and computerize human consciousness play out in a world where some people will no longer be people as we know them, and others will opt to retain their basic humanity? How will the two groups interact? Mr. Kurzweil is also short on explaining how the laws of physics, within the restrictive frameworks of local biological environments, will be worked around.

Monster Intelligence

Everybody knows Nick Bostrom, the founding director of the Future of Humanity Institute at Oxford University. He has captured the attention of technical luminaries like Bill Gates and Elon Musk as well as philosophers, world leaders, and military people. He too brings tidings of new life, but one not nearly as comforting as that of the medical futurists.

His book, Superintelligence: Paths, Dangers, Strategies, warns that our computers will one day be so powerful, so intelligent, that (1) they will be as smart as us, and (2) then surpass us, and (3) then proliferate on their own and, well, simply destroy us. This is "the most daunting challenge humanity has ever faced" (Superintelligence, p.v). He obviously cares about what happens to us humans and our lovely little blue planet. He's a decent person. But he too is buried in his machines; his delivery is machine-like; his monotone when he lectures is, like Kurzweil's, sleep-inducing.

The assumptions about machine intelligence go back to the Turing test, the so-called litmus test for computer intelligence: if there is no difference between human intelligent performance and computer performance, then the computer has human intelligence. Several singularity skeptics, for example John Searle and Luciano Floridi, note that Alan Turing titled his article, “Computing Machinery and Intelligence,” and that in his day a computer was a person, someone who computed for a living. Turing's own answer to the question "Can a machine think?" was that the question "is too meaningless to deserve discussion” (see Floridi, p.9). But Bostrom doesn't really consider Turing's assessment of his own machine.

If intelligent, then the next lightning-rod question is: can a machine have motivation, consciousness? This issue has gone to the academy; this is now a philosophical debate, whether or not it should be. John Searle, the Berkeley professor of the philosophy of mind and language, wrote that:

[T]he idea of superintelligent computers intentionally setting out on their own to destroy us, based on their own beliefs and desires and other motivations, is unrealistic because the machinery has no beliefs, desires, and motivations. (Searle, see References.)

Floridi wrote that Bostrom's kind of speculation is more about "true AI believers" than AI: "Think ... Data in Star Trek: The Next Generation (1987), Agent Smith in The Matrix (1999), or the disembodied Samantha in Her (2013)," he said (Floridi, p.8).

David Wood of the London Futurists group (see References) introduced Amnon Eden, a featured speaker, at one of their events by declaring that we are near the most important development in human history:

more important than the invention of the compass and navigation, ... the printing press, ... the development of the steam engine, the industrial revolution, possibly more important than the invention of nuclear weapons with their potential to destroy the earth. I'm talking about the possibility of a technological singularity. Which means that software will become more capable than humans ... cleverer than humans at reading all the content of the Internet and making sense out of it, cleverer than humans at writing software, writing artificial intelligence ... (Wood, 0.5 min. ff)

This may be true, and I know Mr. Wood isn't putting the discovery of the printing press and the industrial revolution "in their place," but it also doesn't appear that he is honoring their inventors as people whose work helped us get to where we are. Mr. Eden's tone in his lecture vacillates at times between alarmist and cocky.

What do we owe our machines? Why would we want to make something smart enough to destroy us in the end? If we don't want swarming drones on the warpath, if we don't want computers to run global finance or divulge our personal information or manipulate our biology or leave us jobless or secretly assess our mental health or subjugate us to a foreign power or kill us on purpose or by accident, that's our decision. I think we still want to leave the world a better place for our children and grandchildren.

The futurists are certainly right that we must develop strong monitoring systems, but we're the monitoring machines. People will continue to fly, sprint, walk, or plod forward, according to their individual styles, understanding their lives as best and happily as they can, as we've been doing since before the Stone Age. We've always been technicians. Fire, words, and symbolic inscriptions were among our first future-altering technologies, and they're still among the technologies we depend on every single day.

Neuroscience & Consciousness

Computers are dry and nonbiological. The human brain is wet and biological. And it operates throughout every part of our body. Human consciousness—looking, talking, painting, playing ball, praying—is biological. The fact that our brains spill out onto the world in the expressive ways that they do should put us in some awe.

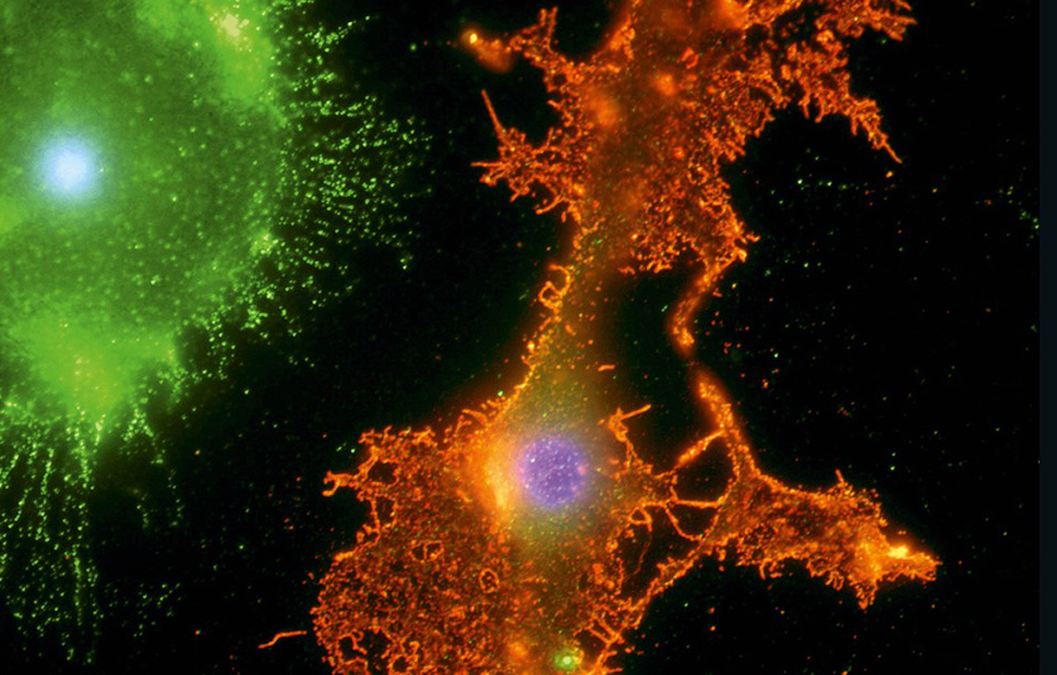

Used by permission.

Our beautiful, complex brains may or may not be the last frontier, but we have a way to go in understanding all that they are. They are the processors and interpreters of our humanity. And they break our hearts when they don't work right.

The above image of a human brain neuron was achieved with an SEM, or scanning electron microscope, which produces images using a focused beam of electrons. Neurons allow information to be received, interpreted, and relayed around the body. The long, thin structures that radiate outward from the cell body are called neurites, a general term for the projections through which nerve cells connect to form a network. A neurite can be a dendrite, which receives the nerve impulses, or an axon, which transmits them.

Neurons form all of the information circuits in our brains, and they are always monitoring their activity levels. At each synapse, they process the chemical messages they receive so they can maintain a consistent level of synaptic activity and properly respond to the signaling around them.

Neurons constantly alter their DNA levels to maintain this balance and in the process mutations and slight excisions (cuts) can occur. Researchers who study mental illness and other brain dysfunctions are now working on whether some brain disorders occur because certain neurons lose their flexibility or are altered after a slight DNA excision.

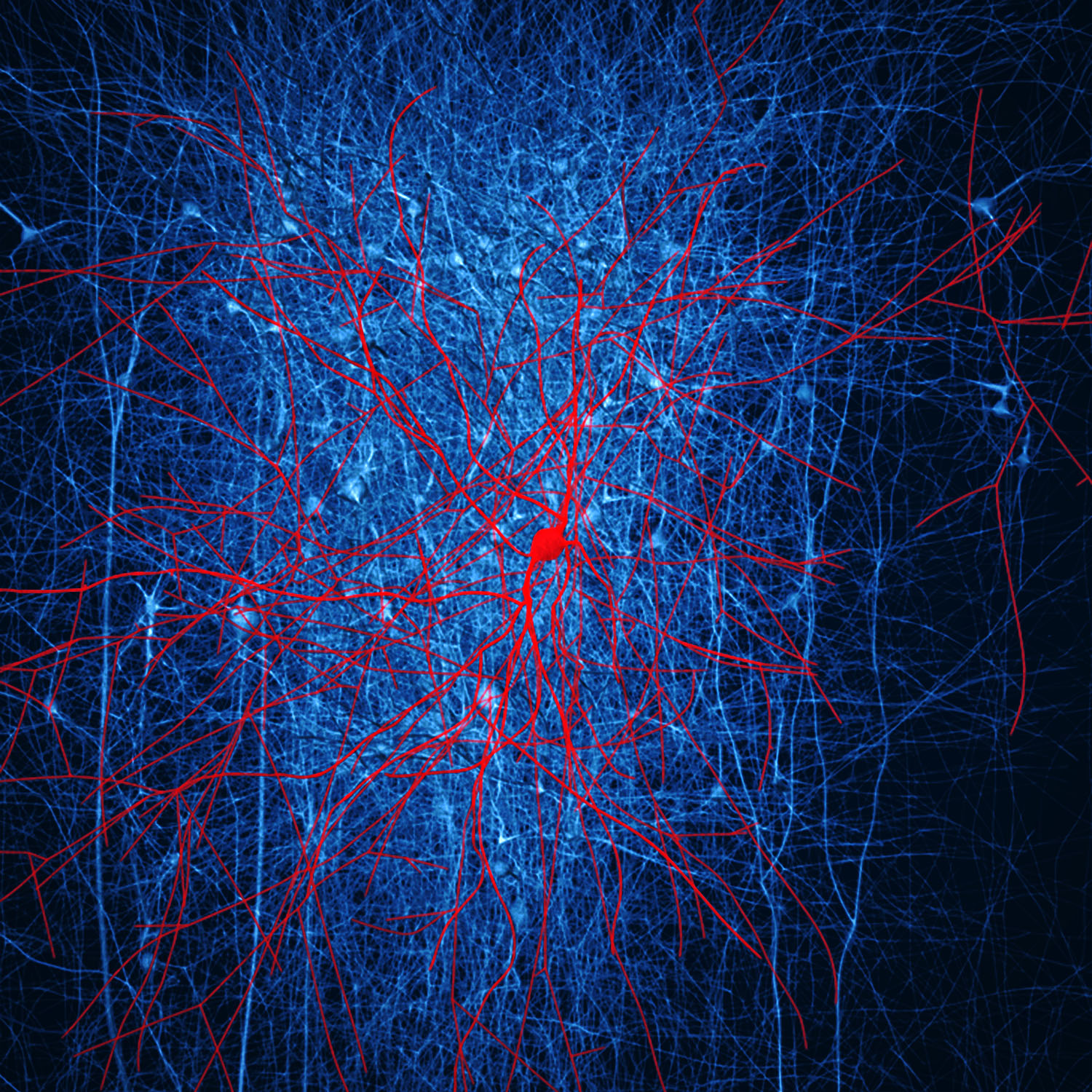

Used by permission.

The two glial cells above are a microglial cell (upper left) and an oligodendrocyte (center) from a human brain, via a fluorescent light micrograph. Glial cells produce myelin; they are extremely important to brain function. The myelin coats the axons radiating from neurons, thereby enabling more rapid communication of electrical impulses from one neuron to another. Microglia recognize and respond to areas of damage and inflammation in the central nervous system. The ragged extensions of the oligodendrocytes supply the myelin that insulates the axons.

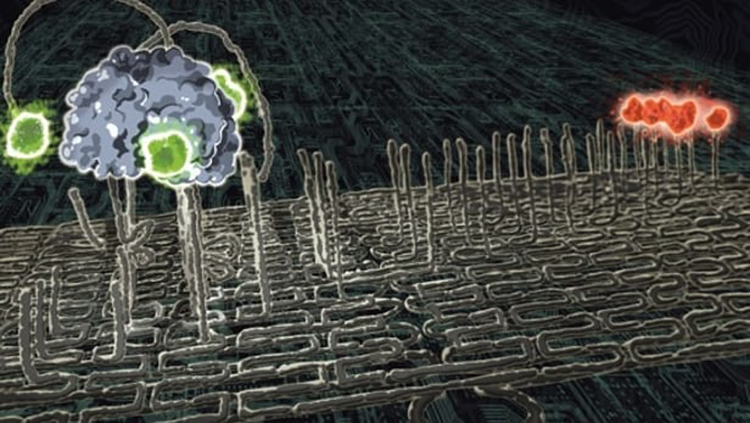

The next image is not from a human brain, but is a computer simulation made by the Blue Brain Project. This project, several years old now, has been controversial from the start. The New York Times carried an article in 2013 (Requarth, see References) that gives a general description of the Blue Brain work.

The red-colored cell in this image is known as a basket cell. It is found in the molecular layers of the cerebellum, hippocampus, and cerebral cortex and is multipolar, which means that it connects to more than one neuron. Basket cells produce gamma-aminobutyric acid, or GABA, which can inhibit (like a gatekeeper shutting the gate) the presynaptic neurons (blue-colored) from receiving messages. This isn't a positive or negative effect in itself; within one human being, in one moment, these effects can work to one's advantage or disadvantage.

Rupert Goodwins, a former programmer who is now a technology journalist, calls artificial intelligence inhuman intelligence and believes that its antecedents were "the realm of the gods and spirits of ancient times." Our cultural assumptions about being ruled by AI are thus inherently embedded in us as a species, he said, echoing Carroll. To complicate matters, we now have machinery that actually does think more quickly and logically than we do. "But brain simulation is still a simulation," he says—not the real thing:

[T]he human brain is extraordinarily complex, with around 100 billion neurons and 1,000 trillion synaptic interconnections. None of this is digital; it depends on electrochemical signaling with inter-related timing and analogue components, the sort of molecular and biological machinery that we are only just starting to understand. (Goodwins, see References)

Emergence & Brain Plasticity

In The Big Picture, Sean Carroll engages the reader with his signature clarity. His description of emergence in physics seems so simple, yet emergence, like the atom, isn't just a theory. It's a naturally occurring law in macro-, micro-, and invisible environments. You don't have to be a physicist to know about it; we all experience it every day.

Emergence is also a law of neuroscience, and it underpins human creativity. Since birth we and the people around us have given our brains instructions about our individuality, just as our brains have instructed us. What happens when a convergence of neural activity leads to a particular personal experience is a property of the human brain. We're complicated.

Strong AI depends on strong amounts of learned information, but our brain does not. Our forever developing brain creates strategies for how to learn, not for the information learned. The process has nothing, nothing to do with brain size. It's all about our brain's continuous and multiplying connections and refinements with itself and its environment. It's constantly editing itself.

The neuroscientist Michael Gazzaniga, in a series of Gifford lectures at the University of Edinburgh and in his book Who's in Charge? Free Will and the Science of the Brain (see References), gives a vivid description of brain evolution and plasticity that provides the puzzle parts missing from the computational brain models. While genes determine our large-scale brain, specific connections at the local level depend on what we're doing, epigenetic factors present, and our own experience. Think of it—the local and the epigenetic—it really sets the stage for the human condition, he says (What We Are, 32:30 min. ff).

The human brain has worked complexity into its system to ensure that lots of things can happen in there at once. No one part of our brain was in charge as it evolved; no one part controls. It accommodates a complex group of interactions, inventiveness, and plenty of cognitive niches.

Gazzaniga noted that we will not duplicate the brain's capacity for "qualitative and novel circumstantial activity" via raw computational methods because the brain has its own unique organizing principle. We have evolved "an unrivaled number of highly refined abilities" via our inventive adaptability. The combination of these abilities "gives rise to additional abilities that solve general problems, leading to domain-general abilities that are uniquely human. As this process happens, the whole brain is getting rearranged. It’s not a simple addition of more and more abilities" (What We Are, 39:50 min. ff).

Gazzaniga asks in The Distributed Networks of Mind (see References): how do you get to capture this magnificence? Our brains differ from other animal brains. With our 90 or so billion neurons, only 20 billion are in the neocortex, which is responsible for thought, culture, free will, the things that define us. Does it seem odd that fewer neurons appear in the part of our brain where we consciously define ourselves?

[What is] vastly greater is the arborization of the neurons, the possibilities for connections, the arborization of the dendrites. This is one thing different in the human. ... There are unique neuronal capacities in the human that must be considered. The way the human brain has organized itself is unique (Distributed Networks, 44:00-50:00 min. ff, emphasis mine).

We don’t yet know "the trick," he says, that takes the brain from its specialized mechanisms to the “miracle” of the general domain. Trick? Miracle? This process will occupy neuroscientists for the next 200 years, he believes. The Sidney Harris cartoon below reminded me of Gazzaniga's description of the complexity of the brain's movement to the general domain.

After his first lecture, Mr. Gazzaniga was asked whether he could ever foresee a brain transplant. His blunt answer was: No. Ideas are transferred from one brain to another through books, music, discussion, and the like, not through actual transplants. The brain needs our senses to gather the information. Stem cells might help brains undergoing some type of degenerative disease, but Gazzaniga does not foresee whole-organ transplants, as we have with hearts, for example. We have to get to know ourselves better, he says; we're explanation-seekers, but explanations aren't simple.

The Brain's Left-Hemisphere Interpreter

In his lecture The Interpreter, Mr. Gazzaniga moves further into how the brain creates human consciousness. Consciousness is not a single process, and it is not a general process:

It is an emergent process that arises locally out of hundreds if not thousands of widely distributed specialized systems (modules)...

From moment to moment different modules compete for attention and the winner serves as the neural system underlying that moment of conscious experience.

We do not experience a thousand chattering voices, but a unified experience.

Consciousness flows easily and naturally from one moment to the next with a single, unified, and coherent narrative.

The psychological unity we experience emerges out of a particular specialized system called the interpreter.

The interpreter appears to be uniquely human and specialized to the left hemisphere. It is the trigger for human beliefs which constrain our brain. (The Interpreter, 49:00–1:19 min.)

I had never heard of the "interpreter," localized to our left hemisphere. But it is the part of us that raises this question: did we learn morals at our mother’s knee, or has morality evolved because it benefited us over time? Is it a bad thing to kill because religion teaches us that, or do we know innately that killing is not good for us? When a person believes that something that is wrong is right, or something that is right is wrong, most of us will think that his or her brain is impaired. (This distortion is found in split-brain people.)

In a very interesting twist, Gazzaniga says that "hijacking" the brain with virtual reality can leave the interpreter with distorted information or none at all, an effect similar to brain damage: you've clipped the system that cues you to what’s going on, and you could get hurt (The Interpreter, 49:00 min. ff).

Emergence in neuroscience is based on "the notion of multiple realizability, a clear philosophical concept that there are many ways to have the same output," says Gazzaniga, because the science has worked this out to be true. "There are millions of combinations of firing and transfer, thousands and thousands of settings of synapses that would produce the exact same behavior." The brain does not follow only deterministic rules.

The idea of the reality of emergence isn't new. It was started years ago by Niels Bohr and Werner Heisenberg, the latter having stated in his own Gifford lecture in 1956 that indeterminism is necessary "and not just consistently possible" (Free Yet Determined and Constrained, 31:21 min.).

With respect to the "hijacking" language above, we could argue that the futurists who dream of expanding the brain's capacity have it backwards; that a machine would necessarily be impeded by its dependence on the information it is fed, whereas the human brain is constantly responding to outer stimuli.

In case I seem hopelessly backward and out of touch with current reality, let me say I love technology. It allows me to be my own publisher, to write this article and have it read in different places around the world all on the same afternoon. I think that the biotechnology research being done, for example, at Caltech and other research centers in the search for cures for our most intractable diseases, like Parkinson's, Alzheimer's, autism, spina bifida, epilepsy, and mental illness, is the best we could hope for. I for one will want the most recent medical interventions if I ever need them.

My son is a composer and teaches music composition at a college. He tells me about the art and music created with the help of electronics. Computers can be programmed to write Bach; he sends me a link; it is impressive. The thoughtful, highly original electronic artist Camille Utterback creates visual effects that respond uniquely to each individual viewer. I have a piece of digital art created and programmed by my sister on my wall at home. The artists working with the electronics, not the computers, are the agents of creation. To "create" the Bach, the computer needed two things: the original programmer, Bach himself, and the current programmer, both of whom had the benefit of brains unrestricted by digital boundaries and in tune with the musical environments of their times. (I must say, however, that nothing replaces hearing a great cellist play Bach's solo cello suites live, in a small arena.)

Conscious & Unconscious

The singularitarians, the computer brain developers and eternal lifers, are practicing a form of displacement. They are displacing human matters onto machines that, because they are machines, cannot properly respond. Our quantum computers, our nuclear weapons, are so powerful, while much of our self-knowledge is still unconscious. This is where we need some work.

The fact is, we don't know what to do with the power of our quantum computers. If people fear being ruled by them, they have good reason. Too many of us are seduced by the new technology; we submit blindly to silly social media algorithms in a rite of being in touch with technology's power.

The Halo Effect

Because we can spill our life stories into our machines, we do (not a good reason). It may make us feel important, that halo effect, but we're not thinking through what we're submitting to. Your thoughts, your privacy are your own, but once you give them away they are literally owned by someone else, computerized, categorized, and modeled for third-party purveyors.

This isn't a simple problem. Like young Narcissus, some of us are locked into a gaze that reflects back to us in the mirrors of our devices. We look into them to see who likes us, who loves us. We think we're communicating with the world but our entanglement with the mirror belies that. Our life is in fact less real, more digital, if you like that sort of thing. The following sounds like a cult promise:

Technology may allow us to attain the love we all yearn for. If we can develop non-biological beings that truly understand who we are, who we are attracted to and how [sic] we love—it is only inevitable that some of us will fall in love with them. Instead of searching for soulmates, we could create them...

AI may ultimately be able to soothe the human condition and relieve us of the existential angst of loneliness by granting access to something we all crave—the powerful desire to love and be loved. (Bidshahri, see References)

We're selling ourselves short if we look to computers as agents of understanding or recognition. We might think they "connect" us, that life in cyberspace will validate us in the world, but in fact the opposite happens. We don't get hired because we posted a ridiculous photo on Facebook or we tweeted angrily about some company or other. Our need to be validated is another one of those all-too-human pitfalls that the Internet eats up. We lose a proper sense of give and take with real people.

The HBO sci-fi series Westworld is another version of the war of the worlds. The comely robot hosts don't know that they're there simply for the amusement of their guests. The human guests, on the other hand, are anticipating the opportunity to engage in actions that would carry responsibility in the real world, but not here at the robot ranch (they're just robots, after all). The guests can do what they want. They abuse their android hosts, who after a certain amount of time start to gain consciousness and fight back. The psychologists will have fun with this series, in which we humans project our fears and desires onto anthropomorphic agents, as we have done forever, in an effort to engage or combat our shadow side.

What Kind of Data?

The tech industry, having partly lost its way, has actually become beholden to its own inventions rather than taking mastery of them. Shareholders want a good return on the massive collections of inaccurate data the industry stores. Governments, corporations, the banking and insurance industries, schools—all obsessively depend on metrics, but often they're the wrong metrics.

The over-emphasis on gathering enough data to support computer consciousness, or to solve problems, is ineffective because issues have more than cognitive parts. For the "emotional intelligence" component, the closest data input can get to all the other parts of the brain that make us human is information derived from surveys, interviews, questionnaires and the like, all of which are essentially subjective but must get answered with a yes or a no, an always to never, a 1 to 10.

What about all the things we dream about? How and why does our brain create them, and where do we store that information? How do we make it accessible? Corporations and insurance companies don't have this information in their data sets.

Your imagination, your feelings, your sense of yourself all contain an awful lot of information, more than you realize, but they're "just feelings" if you don't know from whence they come and don't know how to investigate them. You may even be cut off from your feelings, which is all the more complicated, because they're not cut off from you.

(Why did you decide to take a long drive today? Was it the weather, the condition of your car, something your grandmother said, what happened to your stock, or that you felt really bad or really good? What does feeling good mean? Does it mean you're not knowledgeable enough to feel bad? You're basically an optimist? You had a good tax return? You're feeling like your "old self" again? Why is that important? If you just had facts before you, maybe you could make an algorithm out of the facts, but you can't make an algorithm out of the emerging mental activity that accidentally or otherwise led to your decision to take a long drive today, because you're not conscious of it.)

Seems to me the truth of us is where it can't be seen.

—Richard Wagamese, Medicine Walk, p.103

My physician friend told me that often the most important part of a doctor visit is the last 30 to 60 seconds, when some concern "bubbles up" (her words) that turns out to be the patient's most important concern. Where did that come from? Not the patient's cognitive center. It came from some other storage center in the brain, influenced, perhaps, by the fact that the doctor visit is coming to an end. This isn't a simple matter of memory. We've stored information, it's memorable, and something influences us to remember it. Why couldn't we think of it ahead of time?

I sometimes wonder that the singularity and eternal life advocates are not blushing with embarrassment at the caliber of their ideas. Most of them do not have to answer to medical patients, clients, or the historical record. They’re free to expound their theories.

Technology is not the second coming of Christ; it’s powerful machines. The break-out speed of quantum computers and the possibilities of bio- and nanotechnology are only beginning their careers. But for some reason, when a new discovery is made—in science, technology, or even the field of human behavior—we impart a finality and leap to conclusions, as though it's the "answer" to something that we've been waiting for. Then soon enough, the new questions start arising.

Jaron Lanier

Jaron Lanier, at a TED conference in San Francisco (Lanier, see References), described how while improvising one day on the piano, his hands solved difficult harmonies that his brain couldn’t handle. What happened? The part of the brain that controls the hands was working on “a different channel than the verbal-symbolic-temporal lobe computation," he said (12 min. ff).

I’m not sure I’d call it a computation, but okay. He then asked, how far can this go? Technology could be used to “wake up” aspects "of human character" that might be functional that we don’t even know of yet. He said human character. There's a phrase not often seen in the technical literature.

Lanier acknowledged the “less conscious” as an active source of growth and creativity. But computers will never have a human consciousness or unconsciousness. Human behavior, the personal motives behind our actions, our tendency to see in others our own gifts or shadow selves, our experiential (rather than rote) encounters that open new worlds to us, are more properly the jurisdiction of psychology, literature, and other forms of unconscious exploration.

Mining Consciousness

Creative writers, for example, know how to work with the flow from unconscious to conscious. Whether they could explain it, they know it instinctively, don’t find it unusual, and depend on it. They will tell you they don’t know what their characters are going to do; they keep writing, and through the process they find out. It's an emergent phenomenon based on active work. You can learn a lot about people and yourself by reading creative fiction.

Have you ever spent hours in a group discussion about what a character in a novel is experiencing, and why? And about how much or how little the writer reveals in the effort to be true to that character? I recently finished My Name Is Lucy Barton, by Elizabeth Strout (see References). It’s relatively short, at 191 pages. The writing seems so simple, you would think you could have sat down and written that book yourself. The writer’s elegant simplicity and spareness work to cast in bold relief entire worlds of longing, sadness, and love, and an emergent experience of those states in the reader.

Creative fiction is one route to the landscape of what we might call the human condition. It doesn’t teach, it presents, makes manifest. It's not rocket science.

The novelist Karl Ove Knausgaard has written an extraordinary six-volume set of novels (My Struggle) based on his life. He has an exceptional memory, but he said in a talk one evening at the 92d Street Y in Manhattan that the process of remembering comes from the writing, not vice versa. He doesn't know what he remembers until he writes it. His work gives you a feel for what thinking, feeling, and remembering are really like, if you've forgotten; he peers into the smallest pieces of his life, takes them apart, puts them under the microscope; this is my experience, his books say. "What was consciousness, other than the surface of the soul's ocean?" he asks in Book 5. "What was consciousness other than the cone of light from the torch in the middle of a dark forest?" In a Lannan Foundation discussion with Knausgaard in the video below, the novelist Zadie Smith discusses consciousness with him.

Depth psychology isn’t considered a hard science, but it too communicates in the language of conscious and unconscious. One of a psychoanalyst’s goals is to help the client become familiar with his or her unconscious content to the extent the client is capable. The unconscious is always active, waking and sleeping. The more we learn about how it “works” on us, through our relation with the analyst and then by extension the world, the less in thrall we are to it and the more we can use it to help us.

Carl Jung, the Swiss psychiatrist and depth psychology pioneer, gave the world the terms extravert, introvert, and collective unconscious, the latter of which lies beneath (you might say) the personal unconscious. The collective unconscious calls up inherited content that might be described as an invisible field of dreams. Again, we are very complicated.

People Who Think Like Machines

When editors at the Edge website asked Haim Harari, a physicist at the Weizmann Institute of Science in Rehovot, Israel, to comment regarding whether machines can think like people, he responded, "Some prominent scientific gurus are scared by a world controlled by thinking machines. I am not sure that this is a valid fear. I am more concerned about a world led by people, who think like machines, a major emerging trend of our digital society" (Harari, see References; his emphasis).

Logic is the strong suit of the thinking machine, he says, while common sense and knowing what is meaningful is a human skill. But the difference between machine thinking and human thinking narrows when people start thinking like machines and then wind up believing, erroneously, that machines think like them. And then what?

A very smart person, reaching conclusions on the basis of one line of information, in a split second between dozens of e-mails, text messages and tweets, not to speak of other digital disturbances, is not superior to a machine with a moderate intelligence, which analyzes a large amount of relevant information before it jumps into premature conclusions and signs a public petition about a subject it is unfamiliar with. (Harari, see References)

Not only this kind of busyness, but an insistence on transparency and academic freedom for the sake of principle can actually prevent "raw thinking" and robust discussion in public circles. In a logical framework, academic freedom could work against common sense and factual knowledge, says Harari, "to teach about Noah's ark as an alternative to evolution, to deny the holocaust in teaching history or to preach for a universe created 6000 years ago (rather than 13 billions) as the basis of cosmology."

In a different essay on Edge, Technology May Endanger Democracy, Mr. Harari brings up problems that science and technology have inadvertently created in the global political, financial, and journalistic spheres. It's easy to say things like "it's not the machines we have to worry about, it's how we use them." Mr. Harari goes beyond this simple cry in the wilderness to offer a way to think about answers. He spells out a number of issues in coherent, everyday language and offers suggestions. For example, political decision-makers need strong computer knowledge, need to understand how the Internet can disseminate untruth and create political flareups that grow into large problems. I recommend going to Harari's article and reading it. Look also at this bit of fake news.

Many of the people caught up in the strong-AI and singularity debates walk slightly above ground. What do I mean? I mean they are so rich, their lives so rich, their minds so machine-buried, they really don't know what occupies the minds of the rest of the world.

Luciano Floridi has also called out singularitarian issues as rich-world concerns, "likely to worry people in leisure societies, who seem to forget what real evils are oppressing humanity and our planet, from environmental disasters to financial crises, from religious intolerance and violent terrorism to famine, poverty, ignorance, and appalling living standards, just to mention a few" (Floridi, p.9).

Conflicts, crime, and security have all been re-defined by the digital, and so has political power. In short, no aspect of our lives has remained untouched by the information revolution. As a result, we are undergoing major philosophical transformations in our views about reality, ourselves, our interactions with reality, and among ourselves. The information revolution has renewed old philosophical problems and posed new, pressing ones. (Floridi, p.10)

I thought about this when I read a recent article by Garry Kasparov, the Russian chess champion who now chairs the New York-based Human Rights Foundation. He said of his former country, "instead of believing that happy, successful people make for a successful society, socialism insists that a perfectly functioning system will produce happy individuals" (Kasparov, see References). As though happiness were a top-down system.

But electronic records, of every kind and in every nook of our lives, are used to make and monitor a top-down system for decisions on things that affect us right here in our backyard. The Internet was originally celebrated for its capacity to bring ordinary people into communication with each other, around the globe (how happy that first Internet cry, "Hello world!"). It's so different now, in just a few short decades. The people who brought us this new technology had creative freedom, but less foresight; they were young and excited and never envisioned the dilemmas described by Harari, Floridi, and other scientists. Maybe we need a new Google.

References & Works Cited

[Note: This list of books and videos is partial. Also, I can no longer find the link for an excellent video I watched of Sean Carroll and a professor of physics at a Long Island university; the two had a good give and take (I'll add it if I find it again). Any one video below will take you to a host of related videos once you hit the link.]

William S. Baring-Gould, The Art of the Limerick (Clarkson N. Potter, Inc. Publishers, 1967).

Stephen Barrett, The Dark Side of Linus Pauling's Legacy, Quackwatch, September 2014.

Raya Bidshahri, How AI Will Redefine Love, Singularity Hub, Aug. 2016.

Peter Boltuc, "First-Person Consciousness as Hardware," APA [American Philosophical Assoc.] Newsletter: Philosophy and Computers, Spring 2015.

Nick Bostrom, Superintelligence: Paths, Dangers, Strategies (UK: Oxford University Press, 2014).

Nick Bostrom, Superintelligence, Singularity Lectures, July 2016.

Sean Carroll, The Big Picture (Dutton, 2016).

Sean Carroll, The Big Picture, Google Talks, May 2016.

Sean Carroll, ScienceNET, October 2016.

Neil deGrasse Tyson & Ray Kurzweil, 2029: Singularity Year, Cosmology Today, April 2016.

Aubrey de Grey, How to Live Forever, London Real, March 2016.

Aubrey de Grey, Seeking Immortality: Aubrey de Grey at TEDx Salford, TEDx Talks, March 2014.

Aubrey de Grey, A Roadmap to End Aging, TED Global 2005.

William Faulkner, Requiem for a Nun (Random House, 1951).

Richard Feynman, The Meaning of It All (Perseus Books, 1998).

Richard Feynman, The Pleasure of Finding Things Out (Perseus Books, 1999).

Luciano Floridi, "Singularitarians, AItheists, and Why the Problem With Artificial Intelligence is H.A.L. (Humans at Large), not HAL." APA Newsletter: Philosophy and Computers, Spring 2015. The text of this article is available as a pdf online.

Michael S. Gazzaniga, Who's in Charge? Free Will and the Science of the Brain (Ecco/HarperCollins, 2012).

Michael S. Gazzaniga, University of Edinburgh, Gifford Lecture Series, October 2009: The Distributed Networks of Mind; Free Yet Determined and Constrained; The Interpreter; The Social Brain; We Are the Law; What We Are

Rupert Goodwins, Debunking the biggest myths about artificial intelligence, Ars Technica, December 2015.

Haim Harari, Thinking About People Who Think Like Machines, The Edge, December 2015.

Haim Harari, Technology May Endanger Democracy, The Edge, December 2013.

James Joyce, A Portrait of the Artist as a Young Man (Viking Press, 1970). 2016 was the 100th anniversary of this book's publication. Karl Knausgaard's On Reading Portrait of the Artist as a Young Man and Colm Tóibín's James Joyce’s Portrait of the Artist, 100 Years On are fine tributes.

Garry Kasparov, "The U.S.S.R. Fell—and the World Fell Asleep," The Wall Street Journal, December 17, 2016.

Karl Ove Knausgaard, My Struggle, Books 1–6 (Farrar, Straus & Giroux, 2009-2011). See this discussion: Karl Ove Knausgaard: The Alchemist of the Ordinary.

Ray Kurzweil, Ray Kurzweil Speaks at the International Monetary Fund, Singularity Lectures, October 2016. (Kurzweil has nothing to offer in this panel; Christine Lagarde might have thought he would, but he says the same things he always says in these arenas.)

Ray Kurzweil, The Singularity Is Near (Penguin Books, 2006).

Jaron Lanier, Who Owns the Future? (Simon & Schuster, 2014).

Jaron Lanier, You Are Not a Gadget, TEDxSF, December 2010.

Alan Lightman, The Accidental Universe (Vintage Books, 2014).

Alan Lightman, The Accidental Universe, Google Talks, October 2014.

Colum McCann, Thirteen Ways of Looking (Random House, 2015).

Tim Requarth, "Bringing a Virtual Brain to Life," The New York Times, March 28, 2013.

Colin Salter, ed., Science Is Beautiful: The Human Body Under the Microscope (Batsford, B.T. Ltd., 2015).

John Searle, "What Your Computer Can't Know," The New York Review of Books, October 9, 2014.

Elizabeth Strout, My Name Is Lucy Barton (Random House, 2016).

Nassim Nicholas Taleb, The Black Swan (Random House, 2007).

Nassim Nicholas Taleb, Antifragile (Random House, 2011).

Niklas Toivakainen, "The Moral Roots of Conceptual Confusion in Artificial Intelligence Research," APA Newsletter: Philosophy and Computers, Spring 2015.

Richard Wagamese, Medicine Walk (Canada: Milkweed Editions, 2016).

David Wood & Amnon Eden, The Singularity Controversy, London Futurists, May 2016.

Comments? Please send your responses on the site's Contact page. Thank you!